User Acceptance Testing (UAT) refers to validation tests carried out by the end users of a digital product before it is deployed to production. Sometimes also called end-user testing or beta testing, UAT verifies that the software, application or website meets the business needs and expectations of the people who will use it every day. This phase takes place after the development teams have validated all technical features, which makes it the final validation step before the product is released to the market.

In an ever-changing digital world, taking the user experience into account has become essential to the success of a project. UAT is the final, crucial phase of the software development life cycle (SDLC), and it places the user at the center of the validation process. Unlike technical tests, which verify that the code works properly, UAT checks that the product is intuitive, useful and acceptable to those who will be using it.

UAT is the last line of defense before an application, website or software is released to the market. It ensures that the product not only works technically, but also meets the real expectations of its everyday users. By directly involving end users in the final validation phase, companies check that the product delivers on its promises under real usage conditions, taking into account actual habits, environments, and constraints. This also reduces the risk of user friction and of heavy fixes after the production release.

Summary

1. UAT vs other types of testing
2. Why implement UAT?
3. Key players in UAT testing
4. Methodology: how to perform a UAT test
5. Different types of UAT tests
6. Best practices for optimizing UATs
7. Automation of UAT testing
8. The strategic impact of UAT on product success

It is essential to understand the fundamental difference between UAT and technical compliance testing. Compliance testing, carried out by developers and functional testers, verifies that the product meets the technical requirements set out in the specification document. It requires in-depth technical knowledge and can be performed at each key stage of development.

UAT, on the other hand, evaluates the actual user experience. It focuses primarily on the end customer and is performed only once, constituting the final stage before deployment. A product can pass all technical compliance tests perfectly and still fail UAT testing. For example, an application may work perfectly according to specifications, but its controls may prove unintuitive for end users.

This complementarity of approaches is crucial: technical testing ensures that the code works properly, while UAT testing ensures that the product meets the real needs of users in their everyday context.

One of the primary benefits of UAT is that it provides distance. Development teams that have focused on technical aspects for months can lose sight of the user's point of view. UAT allows them to step back from this technical view and evaluate the product as a whole, through the eyes of those who will actually use it.

Early correction of defects represents a major economic advantage. The cost of fixing a bug after large-scale deployment is often cited as being up to 100 times higher than before launch. UAT testing identifies and corrects issues that were not detected during technical testing phases, thus avoiding costly post-deployment corrections.

Improved customer satisfaction is directly linked to the success of UAT testing. By involving end users in the validation process, the company ensures that the product truly meets their expectations. This approach strengthens user confidence and significantly improves the company's brand image.

The final quality assurance provided by UAT acts as a last filter before production. This step captures usability, real-world performance and integration issues that may have been missed during technical testing.

Reducing business risk is an often underestimated aspect of UAT testing. By detecting problems before launch, the company avoids potential damage to its reputation, loss of customers, and excessive customer support costs. UAT testing thus protects the company's investment and secures the expected return on investment (ROI) from the project.

Functional experts play an important technical role in UAT testing. They have a deep understanding of how the product works and can guide users during testing. However, their in-depth knowledge can also be a bias, preventing them from seeing the product through the eyes of a new user.

Business and end users are the real protagonists of UAT testing. They are the ones who will use the product on a daily basis and who know exactly what their operational needs are. Their participation is essential to validate that the product meets the real requirements in the field.

Business analysts facilitate communication between technical teams and users. They translate business needs into functional specifications and ensure that the tests cover all critical aspects of the product.

Product owners and managers provide the strategic vision for the product. They ensure that UAT tests validate not only the functionality, but also the alignment with the business objectives of the company.

Subject-Matter Experts (SMEs) contribute their in-depth knowledge of the application domain. In specialized sectors such as finance or healthcare, their expertise is crucial for validating that the product complies with industry standards and practices.

The customer support team offers a unique perspective based on their knowledge of recurring issues encountered by users. Their participation helps to anticipate future questions and difficulties.

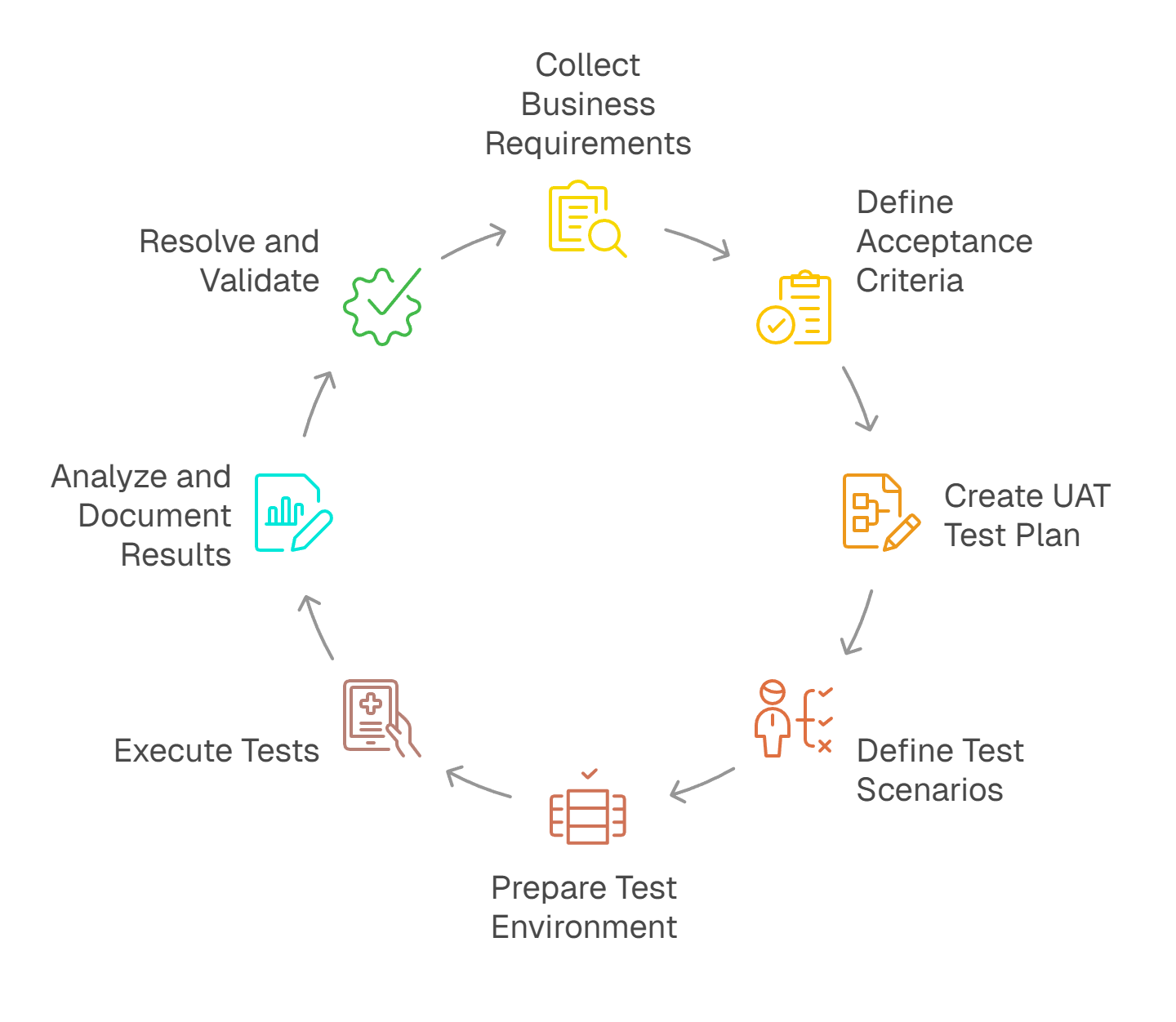

The collection of business requirements forms the basis of UAT testing. This phase involves precisely identifying the key features to be validated, the user roles involved and the critical usage scenarios. Acceptance criteria must be clearly defined and measurable to avoid any ambiguity during evaluation.

Creating a UAT test plan structures the entire process. This document details the objectives, scope, test strategy, and necessary resources. It also defines the success criteria and the conditions for validating or invalidating the tested features.

Defining test scenarios transforms business requirements into concrete actions. Each scenario describes a sequence of actions that the user must perform, with the expected results. For example, for an e-commerce site: the user adds items to the basket, proceeds to payment using different methods (credit card, PayPal), and receives an order confirmation. The scenarios must cover normal use cases and edge cases.
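The e-commerce scenario above can be written down as structured data so that every tester follows the same steps. The following is only a minimal sketch: the `Step` and `Scenario` classes, their field names, and the sample wording are illustrative assumptions, not a standard UAT format.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    action: str    # what the tester does
    expected: str  # what the tester should observe


@dataclass
class Scenario:
    title: str
    role: str                       # user role the scenario is written for
    steps: list[Step] = field(default_factory=list)
    edge_case: bool = False         # False for a normal use case

    def checklist(self) -> list[str]:
        """Render the scenario as numbered lines a tester can follow."""
        return [
            f"{i}. {s.action} -> expect: {s.expected}"
            for i, s in enumerate(self.steps, start=1)
        ]


# Hypothetical scenario based on the e-commerce example in the text.
checkout = Scenario(
    title="Checkout with credit card",
    role="Registered customer",
    steps=[
        Step("Add two items to the basket", "Basket shows both items and the total"),
        Step("Pay by credit card", "Payment is accepted"),
        Step("Finish the order", "Order confirmation is displayed"),
    ],
)

for line in checkout.checklist():
    print(line)
```

A second `Scenario` with `edge_case=True` (for example, a declined card) would cover the edge cases in the same format.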

Preparing the test environment and data ensures reliable results. The test environment must accurately reproduce production conditions, including server configurations, third-party integrations, and realistic data volumes. Test data must be representative and anonymized to comply with data protection regulations.
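As an illustration of preparing representative but non-identifying test data, the sketch below replaces names and e-mail addresses with tokens derived from a salted hash. The record layout, the `pseudonymize` and `anonymize_customer` helpers, and the salt value are all assumptions made for this example. Strictly speaking this is pseudonymization, which on its own may not be enough to count as anonymization under data protection rules, so it should be reviewed against the applicable regulation.

```python
import hashlib


def pseudonymize(value: str, salt: str) -> str:
    """Return a short, stable token that does not expose the original value."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:12]


def anonymize_customer(record: dict, salt: str) -> dict:
    """Copy a record, replacing personal fields and keeping business fields."""
    token = pseudonymize(record["email"], salt)
    return {
        **record,
        "name": f"Tester {token[:6]}",
        "email": f"{token}@example.test",
    }


# Hypothetical production-like record; only the personal fields are changed.
production_like = {
    "name": "Jane Doe",
    "email": "jane.doe@example.com",
    "basket_total": 59.90,
}
safe = anonymize_customer(production_like, salt="uat-salt")
print(safe)
```

Because the token is derived deterministically, the same customer maps to the same test identity across tables, which keeps relationships in the data intact.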

The execution of tests involves all participants in a structured process. Tests can be conducted in person in a dedicated room or remotely via collaborative platforms. Each tester follows the defined scenarios and documents their observations, any anomalies encountered, and suggestions for improvement.

The analysis and documentation of results transform raw feedback into actionable insights. Anomalies are categorized according to their severity and impact on the user experience. A detailed report summarizes the results, identifies trends and proposes prioritized recommendations.
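One simple way to turn raw tester feedback into a summary is to count anomalies by severity and by scenario. This is a sketch under stated assumptions: the three severity labels, the feedback records, and the `summarize` helper are invented for illustration.

```python
from collections import Counter

# Assumed severity scale, from most to least serious.
SEVERITY_ORDER = ["critical", "major", "minor"]

# Hypothetical feedback collected during test execution.
feedback = [
    {"scenario": "Checkout", "severity": "critical", "note": "Payment page freezes"},
    {"scenario": "Checkout", "severity": "minor", "note": "Button label unclear"},
    {"scenario": "Search", "severity": "major", "note": "Filters reset unexpectedly"},
    {"scenario": "Checkout", "severity": "major", "note": "Confirmation email missing"},
]


def summarize(items: list[dict]) -> dict:
    """Count anomalies per severity and find the most affected scenario."""
    by_severity = Counter(item["severity"] for item in items)
    by_scenario = Counter(item["scenario"] for item in items)
    return {
        "by_severity": {s: by_severity.get(s, 0) for s in SEVERITY_ORDER},
        "most_affected": by_scenario.most_common(1)[0][0] if items else None,
    }


report = summarize(feedback)
print(report)
```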

Resolution and final validation close the testing cycle. The development teams correct the identified anomalies according to their priority. Each correction is then validated again by users to confirm that the problem has been resolved without introducing a regression.

Alpha tests are conducted internally with the development team and a small group of selected users. This approach allows major bugs to be identified quickly in a controlled environment. Alpha tests offer the advantage of immediate feedback and direct communication between testers and developers. They allow teams to fix critical issues before exposing the product to a wider audience, reducing the risk of a bad first impression.

Beta testing involves a wider audience of external users in real-world conditions. This phase reveals how the product performs in the face of diverse configurations and uses. Feedback from beta testers provides varied perspectives and highlights issues that are impossible to detect in a controlled environment. Companies often use structured beta testing programs, sometimes offering benefits to volunteer testers to encourage their active participation and detailed feedback.

Contractual testing verifies that the product meets the specific commitments defined in the contract between the customer and the supplier. Each contractual requirement is subject to formal validation. Any discrepancies identified must be resolved before final acceptance of the product. These tests are particularly critical in B2B projects where financial penalties may apply for non-compliance with agreed specifications.

Regulatory testing ensures compliance with applicable laws and standards. In highly regulated sectors such as healthcare, finance and aviation, these tests are mandatory and critical. In particular, they verify compliance with data protection rules (GDPR), accessibility guidelines (WCAG) and traceability standards specific to the sector. Failing these tests can result in significant legal penalties and prevent the product from being marketed, which makes this phase essential for the companies concerned.

Meticulous planning is the foundation of a successful UAT. Precisely defining the typical customer allows you to recruit truly representative testers. The sample must be sufficiently large and diverse to obtain statistically significant results. The scope of the test must be clearly defined to avoid wasting effort on non-critical features.

During execution, the central role of users must be respected. Testers must be able to freely express their impressions, even if they are negative. The use of high-performance collaborative platforms facilitates real-time communication between all participants, particularly for remote testing. These tools must allow for the assignment of rights according to roles, the secure recording of exchanges, and the instant sharing of observations.

Rigorous documentation and reporting ensure complete traceability of the process. Each test, result, and decision must be documented in a structured and accessible manner. Prioritizing anomalies according to an impact/urgency matrix allows development teams to focus their efforts on the most critical corrections.
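An impact/urgency matrix can be as simple as multiplying two ratings and mapping the score to a priority band. The three-level scale, the thresholds, and the `P1`/`P2`/`P3` labels below are assumptions chosen for this sketch. Each team would calibrate its own.

```python
# Assumed three-level scale for both axes of the matrix.
LEVELS = {"low": 1, "medium": 2, "high": 3}


def score(impact: str, urgency: str) -> int:
    """Combine the two ratings into a single number (1 to 9)."""
    return LEVELS[impact] * LEVELS[urgency]


def priority(impact: str, urgency: str) -> str:
    """Map a matrix cell to a priority band (thresholds are illustrative)."""
    s = score(impact, urgency)
    if s >= 6:
        return "P1"
    if s >= 3:
        return "P2"
    return "P3"


# Hypothetical anomalies as (description, impact, urgency).
anomalies = [
    ("Typo on help page", "low", "low"),
    ("Payment fails with PayPal", "high", "high"),
    ("Slow search results", "medium", "medium"),
]

# Most critical corrections first.
ranked = sorted(anomalies, key=lambda a: score(a[1], a[2]), reverse=True)
for description, impact, urgency in ranked:
    print(priority(impact, urgency), description)
```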

The continuous involvement of stakeholders maintains alignment throughout the process. Regular updates allow progress to be shared, problems encountered to be discussed, and the strategy to be adjusted if necessary. This transparent communication avoids surprises and facilitates decision-making.

Automation brings significant gains in efficiency and speed. Automated scripts can run hundreds of test cases simultaneously, reducing testing time from weeks to hours. This acceleration enables shorter validation cycles and faster time to market.

The consistency and accuracy offered by automation eliminate variations due to human factors. Each test is executed in exactly the same way, ensuring reproducible and comparable results. This standardization makes it easier to identify regressions between versions.

The scalability of automation makes it possible to test complex applications without a proportional increase in resources. Automated tests can cover thousands of combinations of data and user journeys that would be impossible to test manually within a reasonable timeframe.
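To show how combinations multiply, the sketch below enumerates every pairing of payment method, basket size and user type, then runs the same check on each. The `place_order` function is a hypothetical stand-in for whatever drives the real application (a browser automation tool or an API client), and the parameter values are invented for the example.

```python
from itertools import product

# Illustrative dimensions of the user journey.
payment_methods = ["credit card", "PayPal"]
basket_sizes = [1, 5, 50]
user_types = ["guest", "registered"]


def place_order(payment: str, items: int, user: str) -> dict:
    """Hypothetical stand-in: a real suite would drive the application here."""
    return {"status": "confirmed", "items": items, "payment": payment, "user": user}


results = []
for payment, items, user in product(payment_methods, basket_sizes, user_types):
    order = place_order(payment, items, user)
    # Objective check that needs no human judgment.
    results.append(order["status"] == "confirmed" and order["items"] == items)

print(f"{sum(results)}/{len(results)} combinations passed")
```

With only three short lists, the loop already covers 2 x 3 x 2 = 12 journeys. Adding one more dimension multiplies the count again, which is why manual coverage quickly becomes impractical.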

However, automation has significant limitations. Subjective aspects of the user experience such as ergonomics, aesthetics, and intuitiveness require human judgment. The balance between manual and automated testing is crucial: automation for repetitive and objective tests, manual testing for qualitative evaluation of the user experience.

UAT is an essential bridge between development and production, ensuring a smooth and confident transition. This final validation phase not only secures the technical quality of the product, but also its suitability for the market and the real needs of users.

The impact on commercial success is direct and measurable. Products that have undergone thorough UAT testing tend to have higher adoption rates, fewer support tickets and better user retention. The cost of UAT testing, estimated at between €5,000 and €50,000 depending on the complexity of the project, is more than offset by the savings made by avoiding post-production corrections and the loss of dissatisfied customers.

The future of UAT testing is moving towards greater integration of artificial intelligence to predict user behavior, the use of virtual reality to simulate complex usage environments, and the development of increasingly sophisticated collaborative testing platforms. UAT testing is thus becoming a strategic competitive advantage for companies that master this discipline, enabling them to deliver products that not only work, but delight their users.